Extended Reality

Indio San @ Envisioning

Distinguishing "Real" from Virtual

A Zoom background that transports your head and shoulders to a tropical island during a call; a haptic accessory that lets you feel the action when playing a video game; virtual influencers used to sell clothes; holograms of pop stars performing a live concert for fans. These are just some of the recent ways Extended Reality (XR) has entered our lives, and as we spend more and more time online, it becomes harder to distinguish “real” life from a life lived digitally.

Extended Reality (XR) covers the full spectrum of real and virtual environments and includes Virtual Reality (VR), Augmented Reality (AR), and Mixed Reality (MR), as well as any future immersive technologies that might be created. In 1994, Paul Milgram defined the Reality-Virtuality Continuum, with the physical world on one side and a completely digital, computer-generated environment on the other. We have been hovering somewhere between the two ever since, and as humans experience more machine interfaces, our idea of reality evolves.

All Realities will Flourish

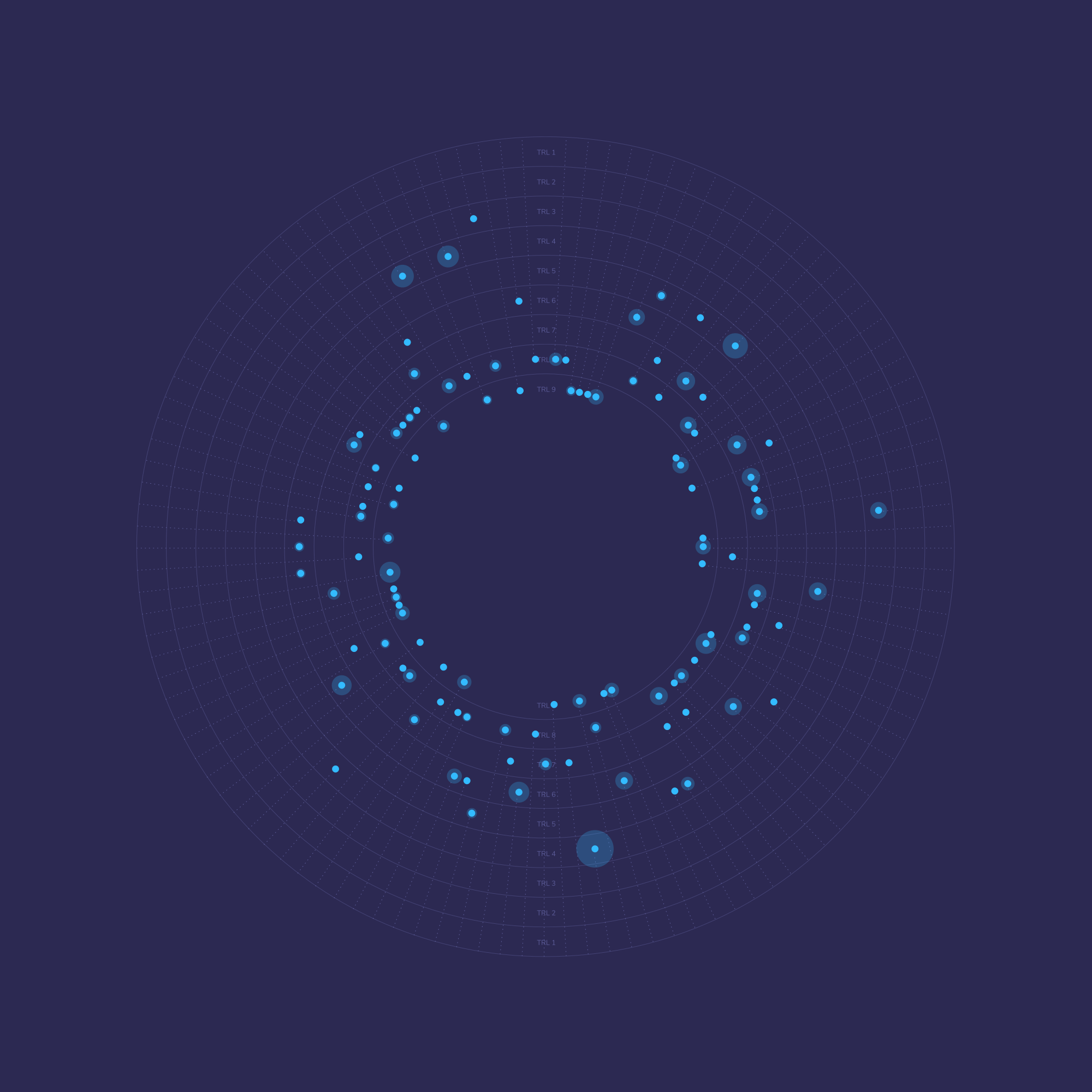

While Extended Reality (XR) is an umbrella term, looking closer at the realities within it means examining the nuances and variations between the types of XR technology. Each type does different things and serves different purposes, and the main differences lie in the level of immersion and the depth of interactivity.

Augmented Reality (AR) is characterized by an overlay of computer graphics on your current environment. Face filters on social media and Pokémon Go are some of the most widespread examples of AR in action.

At the more immersive end of the Reality-Virtuality Continuum is Virtual Reality (VR), defined as a first-person perspective within a 3D reality. You, or a version of you (e.g., an avatar), are taken to a different world, often through a wearable accessory (everything from contact lenses to full haptic suits), where you experience a level of immersion that detaches you from your physical environment.

AR and VR are inverses of one another, while Mixed Reality (MR) lies between the two and adds a layer of complexity to AR. It is a place where digital and physical objects coexist and computer-generated objects can interact with physical environments. Note that all MR is AR, but not all AR is MR.

These immersion levels create a virtual domain that is becoming an all-encompassing space, where we can access commerce, education, entertainment, work, and much more without ever having to leave our homes. The concept of "never having to leave our homes" was seen on a much larger scale during the COVID-19 pandemic of 2020, when many of us were told to stay home. This accelerated the use of extended reality to the extent that "to Zoom" became a verb, much like "to Google" before it, and it allowed more of us to become comfortable socializing, working, and carrying out other tasks online that we would usually have done in person. Now, the idea of bringing all these activities together in one digital environment does not seem as far-fetched as it might have just a couple of years ago.

Enter the Metaverse

The metaverse is a shared digital place that brings all of our online activities together in a single environment. It is a setting where we can present ourselves as our best-imagined "self" or work together on collaborative projects.

Yet there is no single definition of what the metaverse will be in the future. The term first appeared in Neal Stephenson’s 1992 cyberpunk novel Snow Crash, where it is a place people go to escape the dreary totalitarian reality of the world they live in. More recently, The Matrix movies portrayed a similar simulated world as somewhere machines lay us to rest after enslaving us to generate electricity. However the idea is portrayed in fiction, attempts to replicate it in "real life" keep multiplying. Currently, big corporations are promoting their own versions of the metaverse; Facebook, for example, is selling its platform as the closest thing yet to a fully extended environment.

However, the (extended) reality one individual experiences is likely to be different from the one you experience, and the one you experience will be different from the next person's. What is becoming increasingly hard to ignore is that, beyond our individual and cultural differences, it is our access to technology that will define our existence in these realities.

If you can choose to spend time in sophisticated virtual worlds, the only limit is your imagination. You can have your favorite milkshake from your favorite restaurant just like that, you can have the body you want, or you can have the friends you never had in the physical world. Everything you could desire is there, all the time. So why would you ever want to leave?

This perhaps goes some way toward explaining time compression, a phenomenon not yet fully understood, in which people find it harder to judge how much time has passed while interacting in immersive environments. Time compression could be useful in some situations, such as enduring an unpleasant medical treatment or passing the time on a long flight. In other circumstances, it could have harmful consequences, especially as VR headsets and accessories get lighter and therefore more comfortable to wear for longer periods.

Research on game addiction shows that losing track of time during gameplay can negatively affect a player’s sleep cycle and mood, and this could be further heightened when an individual is experiencing XR. Another possible explanation for time compression is that a user in an immersive world has less body awareness, and bodily rhythms are thought to help our brain track the passage of time. Whatever the cause of this phenomenon, no solution has yet been developed to minimize its worst effects.

What Lies Ahead?

Tech on Prescription

Gaming is perhaps the most recognizable way that people have used digital worlds to relax and unwind. Doctors can now also prescribe a video game to treat the cognitive dysfunction associated with ADHD and the brain fog caused by COVID-19.

Indeed, scientists at UC San Francisco’s Neuroscape brain research center are using VR video games to boost seniors’ memories. According to Brennan Spiegel, director of one of the largest therapeutic VR programs in the world at the Cedars-Sinai Medical Center in Los Angeles, VR can help lower blood pressure, treat eating disorders and obesity, combat anxiety, cure PTSD, and ease the pain of childbirth. In the near future, Spiegel hopes to see “VR pharmacies” staffed with “virtualists” who administer targeted doses of VR to treat specific maladies.

Travelportation

An immersive sensory experience that brings travelers as close as possible to a physical destination without actually being there is now a reality. Microsoft's Flight Simulator, which started as an educational tool for new pilots, is now being repurposed for tourism, letting virtual passengers gaze at the entire world. Meanwhile, the project Lights over Lapland, based in Sweden, has introduced virtual tours, inviting anyone with an XR headset to experience the northern lights, an ice hotel, and sledding trails without having to leave their home.

Methods

Spatial computing is a computing approach that allows machines to make sense of the real environment, translating concrete spatial data into the digital realm in real time. By using machine learning-powered sensors, cameras, machine vision, GPS, and other elements, it is possible to digitize objects and spaces that connect via the cloud, allowing sensors and motors to react to one another and accurately representing the real world digitally. These capabilities are then combined with high-fidelity spatial mapping, allowing a computer “coordinator” to control and track objects' movements and interactions as humans navigate through the digital or physical world. Spatial computing currently allows for seamless augmented, mixed, and virtual experiences and is being used in medicine, mobility, logistics, mining, architecture, design, training, and other fields.

Spatial optimization is a computational and mathematical method that uses geographic data to support decision-making in diverse geographic fields, including natural resource management, land-use science, political geography, transportation, retailing, and medical geography. It aims to maximize or minimize an objective linked to a problem of a geographic nature, such as location-allocation modeling, route selection, spatial sampling, or land-use allocation. A spatial optimization problem is composed of decision variables, objective functions, and constraints. Geographic decision variables can be spatial or aspatial. The topological and nontopological relations between decision variables, such as distance, adjacency, pattern, shape, overlap, containment, and space partition, are reflected in the objective functions.
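To make the three ingredients of a spatial optimization problem concrete, here is a minimal Python sketch of a tiny location-allocation problem (a "p-median" formulation, chosen here purely for illustration). The function name `p_median`, the coordinates, and the brute-force search are all assumptions made for this example; real problems use dedicated solvers and real geographic data.

```python
from itertools import combinations
from math import dist


def p_median(demand_points, candidate_sites, p):
    """Pick p sites that minimize total distance to the demand points.

    Decision variables: which candidate sites are opened.
    Objective: sum of each demand point's distance to its nearest open site.
    Constraint: exactly p sites are opened.
    """
    best_sites, best_cost = None, float("inf")
    # Enumerate every way of opening exactly p sites (the constraint).
    for sites in combinations(candidate_sites, p):
        # Objective: each demand point is served by its nearest open site.
        cost = sum(min(dist(d, s) for s in sites) for d in demand_points)
        if cost < best_cost:
            best_sites, best_cost = sites, cost
    return best_sites, best_cost


# Hypothetical data: two clusters of homes and three possible clinic sites.
demand = [(0, 0), (0, 2), (10, 0), (10, 2)]
candidates = [(0, 1), (5, 1), (10, 1)]

sites, cost = p_median(demand, candidates, p=2)
print(sites, cost)
```

Exhaustive search only works for toy inputs like this one; it is used here because it keeps the decision variables, objective, and constraint visible in a few lines.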

3D modeling is the process of creating a three-dimensional representation of any surface or object (inanimate or living) via specialized software. It is achieved either manually, with 3D production software that creates new objects by manipulating and deforming polygons, edges, and vertices, or by scanning real-world subjects into a set of data points used for a digital representation. This process is widely used in industries such as film, animation, and gaming, as well as interior design, architecture, and city planning.
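As a rough illustration of what "manipulating polygons, edges, and vertices" means in data terms, the Python sketch below stores a simple shape as a list of vertices plus faces that index into it, derives the edges, and deforms the shape by moving one vertex. The helper names `edges_of` and `translate` are hypothetical and not taken from any particular 3D package.

```python
def edges_of(faces):
    """Collect the unique edges implied by a list of polygon faces."""
    edges = set()
    for face in faces:
        for i in range(len(face)):
            a, b = face[i], face[(i + 1) % len(face)]
            # Store each edge with the smaller index first so that
            # (a, b) and (b, a) count as the same edge.
            edges.add((min(a, b), max(a, b)))
    return edges


def translate(vertices, indices, offset):
    """Return a copy of the vertices with the selected ones moved by offset."""
    dx, dy, dz = offset
    return [
        (x + dx, y + dy, z + dz) if i in indices else (x, y, z)
        for i, (x, y, z) in enumerate(vertices)
    ]


# A tetrahedron: four vertices and four triangular faces (vertex indices).
vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
faces = [(0, 1, 2), (0, 1, 3), (0, 2, 3), (1, 2, 3)]

edges = edges_of(faces)
# Deform the model by pulling the top vertex one unit upward.
stretched = translate(vertices, {3}, (0.0, 0.0, 1.0))
print(len(edges), stretched[3])
```

Because the faces refer to vertices by index, moving a vertex reshapes every polygon that touches it without the faces themselves having to change.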

Edge computing is a distributed computing method that processes and analyzes data at the network edge, placing the workload closer to where the data is gathered. With edge computing, data does not need to be sent to the cloud to be processed and sent back to the device. Edge devices are often equipped with machine learning algorithms, enabling decision-making without relying entirely on cloud computing. By processing data locally, this approach offers low-latency responses while saving bandwidth and improving security, all necessary attributes for Industry 4.0 applications such as factory machines, autonomous vehicles, and IoT ecosystems in general.
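The idea of deciding locally and sending less data upstream can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function `process_on_edge`, the fixed threshold rule standing in for a machine learning model, and the sample temperature readings are all hypothetical.

```python
from statistics import mean


def process_on_edge(readings, threshold):
    """Handle raw sensor readings on the device itself.

    Returns the readings that need an immediate local reaction and a
    compact summary, which is the only thing that would be sent upstream.
    """
    # Local decision: no round trip to the cloud is needed to spot these.
    alerts = [r for r in readings if r > threshold]
    # Bandwidth saving: a few numbers replace the full stream of readings.
    summary = {
        "count": len(readings),
        "mean": mean(readings),
        "max": max(readings),
        "alerts": len(alerts),
    }
    return alerts, summary


# Hypothetical temperature readings from a factory machine.
readings = [20.0, 21.0, 19.0, 80.0]
alerts, summary = process_on_edge(readings, threshold=50.0)
print(alerts, summary)
```

In practice the threshold check would be replaced by whatever model runs on the device, but the shape of the trade-off is the same: react locally, forward only a summary.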

Building Information Modelling (BIM) is a 3D model-based process, supported by AI-assisted software, that enables simulations of physical structures. The 3D model enables the design, simulation, and operation of what-if scenarios through virtual representations. BIM depends on the physical and functional characteristics of real assets to provide nearly real-time simulations. The data in the model defines the design elements and establishes behaviors and relationships between model components, so every time an element is changed, the visualization is updated. This allows testing of variables such as lighting, energy consumption, and structural integrity, and simulating changes to the design before it is actually built. It also supports greater cost predictability, reduces errors, improves timelines, and gives a better understanding of future operations and maintenance.