MENA 4.0: Women

Laura Del Vecchio

Sebastian Svenson @ unsplash.com

Biases are particularly harmful to marginalized and vulnerable groups in society. For example, poverty itself is gendered; men and women experience it differently. The effectiveness of any inequality reduction strategy is directly tied to gender issues. If data is driving society toward a tremendous transformation, we must ensure that those who collect and use that data take all individuals and collectives into account.

💡

What if we had a tool that could help identify bias in data?

The Algorithmic Bias Detection Tool is a technological solution designed to detect biases contained in large databases. It recommends specific changes to how mathematical models interpret data, using machine learning to drive a corrective process. The algorithm can examine datasets and generate reports on how sensitive variables such as race, gender, class, and religion correlate with the analyzed data, giving a measure of how much biased information a dataset contains.
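To make the idea of a report on sensitive variables more concrete, here is a minimal sketch, not the tool's actual method. It assumes a toy list of applicant records and compares how often each gender group receives a positive outcome. The names `selection_rates`, `disparity_ratio`, `applicants`, and the field `shortlisted` are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict


def selection_rates(records, group_key, outcome_key):
    """Return the share of positive outcomes for each value of a sensitive attribute."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for row in records:
        group = row[group_key]
        totals[group] += 1
        if row[outcome_key]:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Ratio of the lowest group rate to the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a positive outcome, so rates are equal
    return min(rates.values()) / highest


# Hypothetical toy data, invented for this example only.
applicants = [
    {"gender": "female", "shortlisted": True},
    {"gender": "female", "shortlisted": False},
    {"gender": "female", "shortlisted": False},
    {"gender": "female", "shortlisted": False},
    {"gender": "male", "shortlisted": True},
    {"gender": "male", "shortlisted": True},
    {"gender": "male", "shortlisted": True},
    {"gender": "male", "shortlisted": False},
]

rates = selection_rates(applicants, "gender", "shortlisted")
ratio = disparity_ratio(rates)
print(rates)
print(round(ratio, 2))
```

A ratio well below 1.0 only signals that outcomes differ between groups; deciding whether that difference reflects bias still requires human judgment about the context.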

Currently, companies are using facial recognition tools that allow recruiters and hiring managers to select candidates automatically based on their facial expressions. HireVue is a tech company employing AI tools that assess candidates based on job-specific competencies. On the one hand, this solution allows companies to screen and select the most suitable candidates faster; on the other, it can exclude candidates because of systematic bias.

After logging in to the HireVue interface, candidates are asked to look into a webcam while the system records their answers to pre-set questions. Applicants can be interviewed using a desktop computer, laptop, smartphone, or tablet. The output then indicates whether they match the requirements of the job they applied for.

However efficient such systems may appear, the "standard person" they assume is usually a man, more precisely a young Caucasian male; anything that diverges is considered atypical. If companies use a fixed standard of "the ideal employee," commonly referred to as corporate unicorns, individuals who do not meet this standard could ultimately be barred from certain job positions.

An Algorithmic Bias Detection Tool could help prevent this from happening.

For example, Gender Shades is a project intended to uncover gender and race biases in the digital age, working to detect inequality in digital services. The project is based on the concept that automated systems are not inherently neutral and can reflect the coded priorities, preferences, and prejudices of those who have the power to mold artificial intelligence.

Another example is the Bias Analyzer developed by PwC, a tech-enabled service that helps companies proactively identify, monitor, and manage potential risks of bias. The tool helps companies and businesses keep track of the regulatory risks of algorithmic bias and analyze their systems for hidden prejudices.

Impact on Women

🔍

If the Algorithmic Bias Detection Tool becomes mainstream, what impact would it have on this societal group?

According to participants, this tool could help include more marginalized women and foster more gender-equal practices in firms, provided that the female worker has the skills for the position. They also highlighted that it could help ensure individuals have equal access to financial services.

Looking specifically at the MENA region, the impact would be positive, too. This technological solution could build a comprehensive database of biases that have been systematically reproduced, helping to prevent them from recurring. If used when hiring female workers, it could help counter possible discrimination and advance inclusion programs and economic empowerment. Also, through techniques such as variational “fair” encoders, dynamic upsampling of training data based on learned representations, or preventing disparity through distributional optimization, these tools could help establish ethical and transparent principles for developing new AI technologies.
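As a rough illustration of the upsampling idea mentioned above, the sketch below swaps in a much simpler technique than the one named in the text. It rebalances a training set once, using an explicit group label rather than learned representations, by resampling underrepresented groups with replacement. The function `upsample_to_balance` and the toy `training_data` are hypothetical and serve only as an example.

```python
import random
from collections import Counter


def upsample_to_balance(records, group_key, seed=0):
    """Resample smaller groups with replacement until every group matches the largest."""
    if not records:
        return []
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    by_group = {}
    for row in records:
        by_group.setdefault(row[group_key], []).append(row)
    target = max(len(rows) for rows in by_group.values())
    balanced = []
    for rows in by_group.values():
        balanced.extend(rows)
        # Draw extra copies only for groups smaller than the target size.
        balanced.extend(rng.choices(rows, k=target - len(rows)))
    return balanced


# Hypothetical toy training set: two female and six male examples.
training_data = (
    [{"gender": "female", "id": i} for i in range(2)]
    + [{"gender": "male", "id": i} for i in range(2, 8)]
)

balanced = upsample_to_balance(training_data, "gender")
counts = Counter(row["gender"] for row in balanced)
print(counts)
```

Duplicating records does not add new information about the underrepresented group; it only keeps a model from ignoring that group because of its size, which is why more refined approaches work on learned representations instead.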

Nevertheless, all technologies have inherent dangers, and mapping them is the first step to minimizing negative impacts. The following table shows the participants' analysis of possible detrimental outcomes produced by this technology.

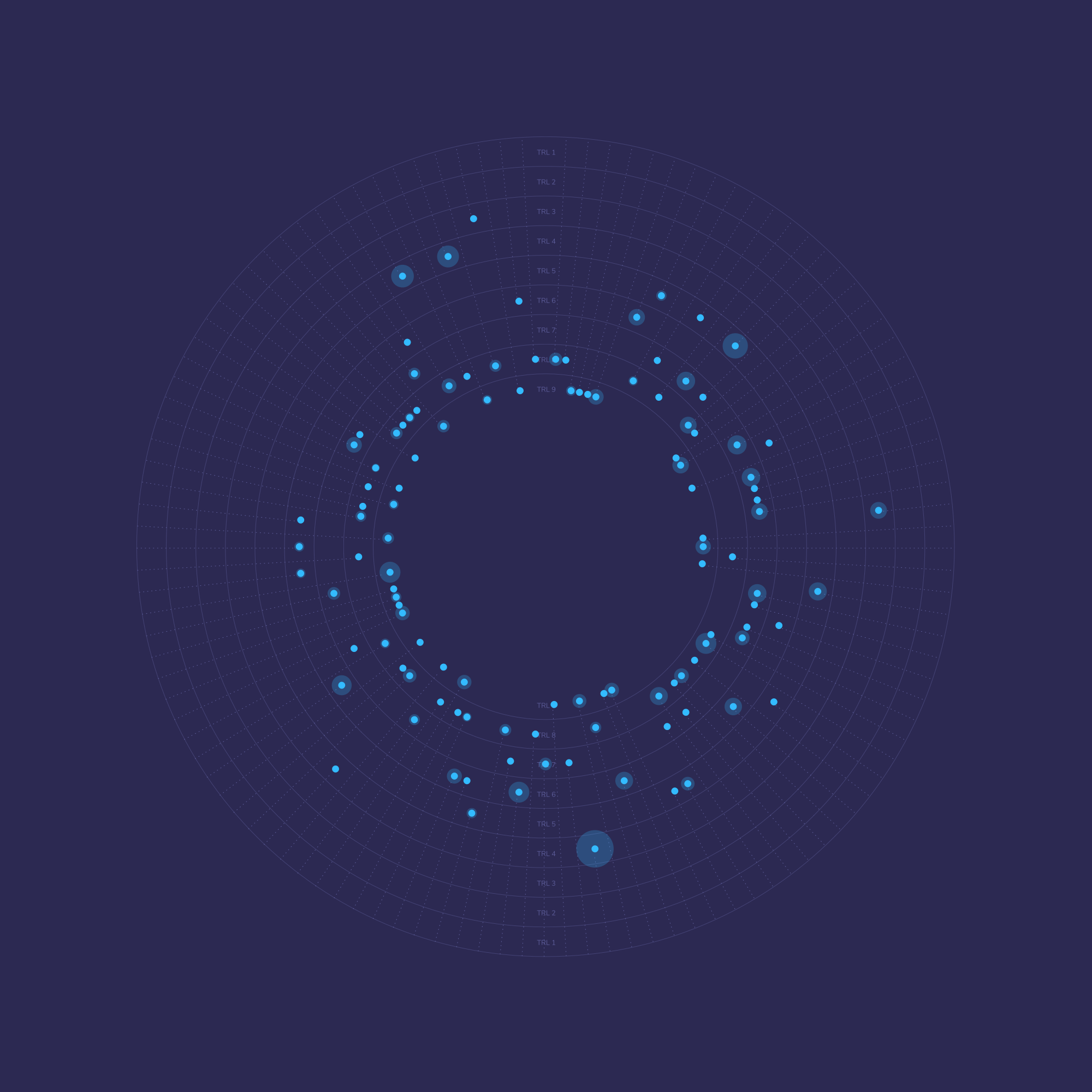

Mapping risks of Algorithmic Bias Detection Tool

Thomaz Rezende @ envisioning.io

For generations, women were confined to the household, limited to taking care of their families and to other domestic duties. Only in recent years have women gained broader access to training, to job roles traditionally occupied by men, and to more evenly distributed economic resources. Even though women's access to technology is growing rapidly and more technological tools are being designed by and for women, what happens when these technologies do not reflect the current reality of more marginalized groups in society, such as women?

Years of historical submission and compliance to a male-based society are not easily overcome, especially when most of the available information from previous generations was recorded by and intended for men. Any artificial intelligence application grows more complex by learning from new inputs added to its algorithmic structure. If the information added reflects only a dominant view of society, the AI system will systematically perpetuate the prevailing perspectives.

Future Perspectives

Quantum future

Sebastian Svenson @ unsplash.com

Participants were particularly interested in how this technology could exacerbate gaps and historical biases in ways that harm or negatively target the user. There is still a lot to do to give women equal access to technological developments, but there are ways to make this shift more diverse and inclusive.

A potential solution is to hire a diverse staff of software engineers and data scientists, including people of different genders, races, and ages, to help broaden the perspective of the algorithms they program.

Ultimately, since many bias-detection procedures overlap with ethical challenges in other areas, such as structures for good governance, a regulatory board must be established in legal and technical terms to bridge the gap and minimize potential conflicts based on bias and prejudice. As with other technologies included in this article, this could ensure that inequalities are not accentuated and that biases are corrected accordingly, with appropriate law enforcement demanded where necessary. Otherwise, an unchecked market with access to increasingly powerful predictive tools can gradually and imperceptibly worsen social inequality.